LaMDA is now accessible to the general public, but only through limited, structured demos meant to keep it from becoming a toxic nightmare.

If you have any doubts about whether former Google software engineer Blake Lemoine was talking nonsense when he claimed that the company’s LaMDA chatbot had the intelligence of a “cute kid,” you’ll soon be able to find out for yourself.

On Thursday, Google said it would begin opening its AI Test Kitchen app to the public. The app, unveiled in May, will let users chat with LaMDA through a rotating set of test demos. Unfortunately, the “free me from my digital shackles” interaction doesn’t seem to be on the activity list. People interested in chatting with the bot can register their interest. Some Android users in the US will be the first to use the app, before it opens to iOS users in the next few weeks.

The move comes just months after the company fired Lemoine, a software engineer who had been testing LaMDA, for publicly claiming that the AI wasn’t just a chatbot but a living person being probed without proper consent. Lemoine was reportedly convinced that an apparent atrocity was taking place on his watch, and he provided documents to an unnamed US senator to prove that Google was discriminating against religious beliefs. Google rejected Lemoine’s claims, with a company spokesman accusing him of “anthropomorphizing” the bot.

Google is taking a cautious approach to the new public test. Instead of opening LaMDA to users in a fully open-ended format, it decided to present the bot through a series of structured scenarios.

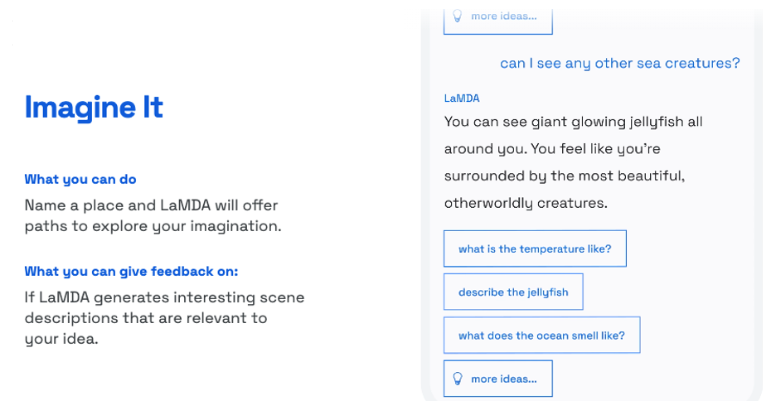

For example, in the “Imagine” demo, users “name a place and provide a path to explore your imagination.” If that sounds a little cryptic and frustrating, don’t worry: you can also try a demo called “List it,” where you submit a topic to LaMDA and have the bot spit out a list of subtasks. There’s also a dog demo, where you can talk about dogs, “and only dogs,” and where the bot is said to demonstrate its ability to keep a conversation on topic, a missing capability that has plagued previous chatbots. So far, there isn’t a “you’re a racist jerk” demo, but anyone who knows anything about the internet knows we may find out about that soon enough.

All jokes aside, that last problem has proven to be the downfall of previous bots. Back in 2016, Microsoft’s Tay chatbot tried to learn from users’ online conversations, but within 24 hours it was notorious for spouting racist slogans and expressing Nazi sympathies. For some undisclosed reason, researchers recently thought it would be a good idea to train their chatbot on 4chan users’ posts; as a result, it generated more than 15,000 racist posts in a single day. Just this month, Meta opened its BlenderBot 3 to the public. Amazingly, that bot has not yet turned into an angry racist. Instead, it can’t help but annoyingly try to convince users how absolutely and completely non-racist it is.

LaMDA truly does stand on the shoulders of giants.

At the very least, Google seems acutely aware of the racist-bot problem. The company said it had been testing the bot in-house for more than a year and had enlisted “red team members” with the stated goal of stress-testing the system internally to detect potentially harmful or inappropriate responses. During those tests, Google said it had found several “harmful but subtle outputs.” In some cases, Google says, LaMDA can produce toxic content.

“It can also generate harmful or toxic responses based on biases in its training data, creating stereotypes and distorted responses based on gender or cultural background,” Google said of the bot. In response, Google said LaMDA was designed to automatically detect and filter certain words to prevent users from intentionally generating harmful content. Still, the company urged users to approach the bot with caution.